What Are the Copyright Implications for Porn of Deepfakes? (AVN)

Read the full article by Mark Kernes at AVN.com

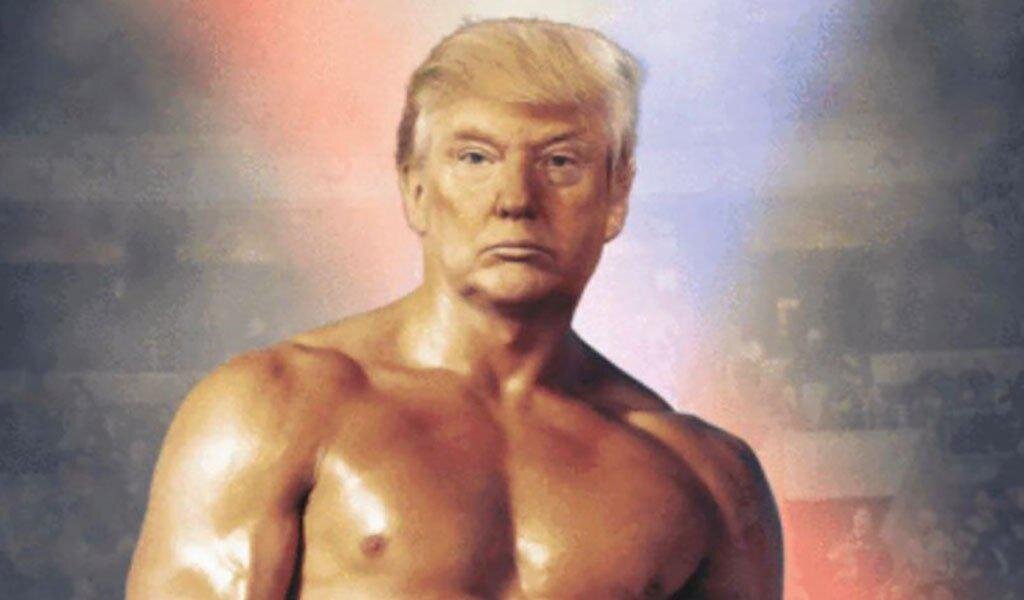

The technology for "deepfakes," which takes a person in an existing image or video and replaces them with someone else's likeness using computerized artificial intelligence, has been around since 2017, but its use is increasing and is expected to play a major role in the 2020 elections. One example may be the deepfake above of the Felon-In-Chief himself, Donald J. Trump, whose minions pasted his head on the body of Sylvester Stallone as boxer Rocky Balboa for Trump to tweet to his followers. In reality, Trump is just another balding, flabby septuagenarian, and not someone whose poster kids would likely hang in their bedrooms.

Perhaps the main problem with deepfakes, since they can be nearly impossible to discern with the naked eye, is that there may easily be many more of them out there than people might notice. And according to some investigators, the vast majority of deepfakes (96 percent, by one estimation) are based on images and video of porn.

"As tech firms scramble to tackle the spread of deepfake videos online, new research has claimed 96 percent of such videos contain pornographic material targeting female celebrities," reported the Indo-Asian News Service. "The researchers from Deeptrace, a Netherland-based cybersecurity company, also found that top four websites dedicated to deepfake pornography received more than 134 million views."